What is Kubernetes used for?

Kubernetes, also referred to as k8s or “kubes,” is a portable, extensible, open-source container orchestration platform for managing containerized workloads and services. Google developed it based on its internal systems Borg and, later, Omega, released it as an open-source project in 2014, and subsequently donated it to the Cloud Native Computing Foundation (CNCF). Written in Go, Kubernetes automates container deployment, load balancing, and auto-scaling, making it a pivotal tool in modern cloud infrastructure.

What are Containers?

Containers are lightweight, executable application components that package application source code together with the operating system (OS) libraries and dependencies it needs. They leverage OS-level virtualization to let multiple applications share a single OS instance while maintaining process isolation and resource control. As a result, containers offer better resource efficiency, portability, and scalability than traditional virtual machines (VMs), making them the preferred compute units for modern cloud-native applications.

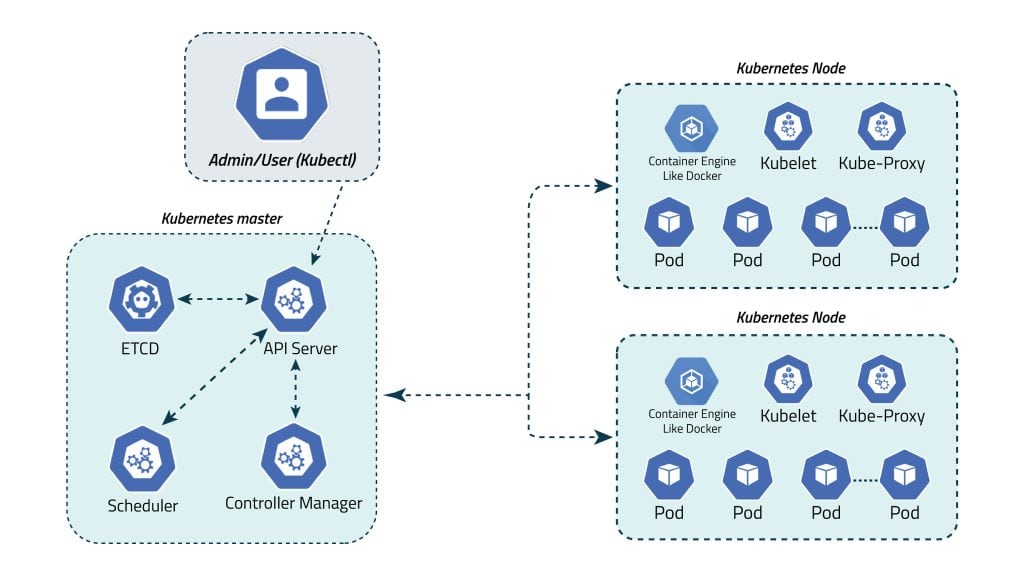

What is a Kubernetes Cluster?

A Kubernetes cluster is a working Kubernetes deployment: a group of hosts running Linux containers. The cluster consists of two main parts: the control plane and the compute machines, or nodes.

Each node, whether physical or virtual, operates within its own Linux environment and executes pods, which are comprised of containers. The control plane maintains the desired state of the cluster, including running applications and container images, while compute machines execute workloads. Kubernetes utilizes various services to automatically determine the best node for specific tasks, ensuring efficient resource allocation and workload distribution. It operates atop standard operating systems, managing containers across nodes.

The cluster’s desired state dictates which applications or workloads should be active, along with their associated configurations and resource requirements. Kubernetes abstracts container management, providing centralized control without the need for individual container or node management. Kubernetes deployments can run on various infrastructures, including bare metal servers, virtual machines, public, private, and hybrid clouds, offering versatility across different environments.

Kubernetes vs Docker

Kubernetes and Docker are often misconceived as competing choices, but they are actually distinct and complementary technologies for managing containerized applications.

Docker enables the encapsulation of all necessary components to run an application into a container, providing portability and consistency. However, as applications are containerized, there arises a need for orchestration and management, which is where Kubernetes comes into play.

Kubernetes, named after the Greek word for “helmsman” or “pilot,” serves as the orchestrator, ensuring the smooth deployment and operation of containerized applications. It’s analogous to a captain steering a ship safely through the seas, delivering containers to their destinations.

Key points to note:

- Kubernetes can work independently of Docker.

- Docker isn’t a replacement for Kubernetes; rather, it’s about utilizing Kubernetes alongside Docker to manage containerized applications effectively.

- Docker sets the industry standard for packaging and distributing applications in containers.

- Kubernetes deploys, manages, and scales containers built with Docker, running them through a container runtime such as containerd rather than requiring the Docker Engine itself.

In essence, while Docker handles containerization, Kubernetes focuses on orchestration and management, making them complementary tools in the containerization landscape.

Kubernetes Secure Configurations

As discussed above, a Kubernetes deployment consists of two parts: the control plane (also known as the master node) and the worker nodes. Each part has its own security recommendations as per CIS Controls. Let's discuss each of them.

Control Plane Components

These security recommendations are tailored for directly configuring Kubernetes control plane processes. However, they may not be immediately relevant for cluster operators relying on third-party management of these components.

1. Control Plane Node Configuration Files

The Control Plane Node Configuration Files play a critical role in orchestrating and managing the cluster’s operations. It is imperative to ensure the security and integrity of these configuration files by enforcing strict access controls.

Best practices dictate that these files should have permissions set to 600 or more restrictive, restricting access to only the root user, who holds the highest level of administrative privileges within the system. By limiting access to these configuration files to the root user and restricting permissions to read and write-only for the owner, organizations can mitigate the risk of unauthorized modifications or tampering.

To set the permissions and owner described above, run the following commands in a Linux terminal.

cd /etc/kubernetes/manifests

sudo chmod 600 kube-apiserver.yaml kube-controller-manager.yaml kube-scheduler.yaml etcd.yaml

sudo chown root:root kube-apiserver.yaml kube-controller-manager.yaml kube-scheduler.yaml etcd.yaml

sudo chmod 600 <path/to/cni/files>

sudo chown root:root <path/to/cni/files>

cd /etc/kubernetes

sudo chmod -R 600 pki/ admin.conf scheduler.conf controller-manager.conf

sudo chown -R root:root pki/ admin.conf scheduler.conf controller-manager.conf

The etcd data directory should have permissions of 700 and must be owned by the etcd user. Find the data directory from the --data-dir argument in the output of the first command below, then set its permissions and owner.

ps -ef | grep etcd

sudo chmod 700 /var/lib/etcd

sudo chown etcd:etcd /var/lib/etcd

This security measure helps maintain the confidentiality, integrity, and availability of the Kubernetes control plane, safeguarding against potential security vulnerabilities and ensuring the smooth and secure operation of the entire cluster.
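To confirm that the permissions described above are in place, a small audit helper can be sketched in shell. This is a minimal sketch, not part of any CIS tooling: the function name `mode_at_most` is hypothetical, and it assumes GNU `stat` (for `stat -c '%a'`), as shipped with most Linux distributions.

```shell
# mode_at_most FILE MAX
# Succeeds (exit 0) if FILE's permission bits grant nothing beyond MAX,
# so 400 and 600 both pass for MAX=600, while 640 and 644 fail.
# Hypothetical helper; assumes GNU stat.
mode_at_most() {
    file=$1
    max=$2
    mode=$(stat -c '%a' "$file") || return 2
    # The leading 0 makes the shell read both values as octal. Any bit that
    # is set in the file mode but not in MAX gives a non-zero result.
    [ $(( 0$mode & ~0$max & 07777 )) -eq 0 ]
}
```

For example, `mode_at_most /etc/kubernetes/admin.conf 600 || echo "admin.conf is too permissive"` flags a file whose mode is looser than 600, and the same helper called with `700` covers the etcd data directory.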

2. API Server

It’s recommended to integrate Kubernetes with a third-party authentication provider like GitHub. This integration enhances security by offering features such as multi-factor authentication. Additionally, it ensures that changes to users, such as additions or removals, do not directly impact the kube-apiserver. Whenever feasible, aim to manage users outside the API server level. OAuth 2.0 connectors like Dex can also be utilized for this purpose.

3. Controller Manager config recommendations

This section contains recommendations relating to Controller Manager configuration flags.

What to do:

3.1 Disable Profiling:

Ensure that the --profiling argument is set to false. Profiling helps identify performance bottlenecks, but it generates extensive program data that might be exploited to reveal system and program details. Unless you are troubleshooting or investigating a bottleneck, disable profiling to minimize the potential attack surface.

3.2 Utilize Service Account Credentials:

Ensure that the --use-service-account-credentials argument is set to true. With this setting, the controller manager creates a dedicated API client with its own service account credential for each controller loop, so each loop operates with only the permissions its task requires, especially when combined with RBAC.

3.3 Bind Address Configuration:

Ensure that the --bind-address argument is set to 127.0.0.1. The Controller Manager API service running on port 10252/TCP, used for health and metrics information, is available without authentication or encryption. Binding it solely to a localhost interface minimizes the cluster’s attack surface.

How to do it:

Edit the Controller Manager pod specification file /etc/kubernetes/manifests/kube-controller-manager.yaml on the Control Plane node.

Set the parameters accordingly:

--profiling=false

--use-service-account-credentials=true

--bind-address=127.0.0.1
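After editing the manifest, it can be useful to check that every recommended flag is actually present. The following is a minimal sketch under stated assumptions: `missing_flags` is a hypothetical helper name, and the check is a plain fixed-string search, so it does not parse the YAML or detect a flag that appears only in a comment.

```shell
# missing_flags MANIFEST FLAG...
# Prints each FLAG that does not appear anywhere in MANIFEST.
# Hypothetical helper; a simple text search, not a YAML parser.
missing_flags() {
    manifest=$1
    shift
    for flag in "$@"; do
        # -F: match a fixed string, -q: no output,
        # -e: lets the pattern start with a dash
        grep -Fq -e "$flag" "$manifest" || printf '%s\n' "$flag"
    done
}
```

Run as root against the Controller Manager manifest, `missing_flags /etc/kubernetes/manifests/kube-controller-manager.yaml --profiling=false --use-service-account-credentials=true --bind-address=127.0.0.1` prints nothing when all three settings are in place. The same helper can be pointed at /etc/kubernetes/manifests/kube-scheduler.yaml for the Scheduler flags.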

4. Scheduler config recommendations

This section contains recommendations relating to Scheduler configuration flags.

What to do:

4.1. Disable Profiling:

Ensure that the --profiling argument is set to false. Profiling helps identify performance bottlenecks, but it generates extensive program data that might be exploited to reveal system and program details. Unless you are troubleshooting or investigating a bottleneck, disable profiling to minimize the potential attack surface.

4.2. Bind Address Configuration:

Ensure that the --bind-address argument is set to 127.0.0.1. The Scheduler API service running on port 10251/TCP, used for health and metrics information, is available without authentication or encryption. Binding it solely to a localhost interface minimizes the cluster’s attack surface.

How to do it:

Edit the Scheduler pod specification file /etc/kubernetes/manifests/kube-scheduler.yaml on the Control Plane node.

Set the parameters accordingly:

--profiling=false

--bind-address=127.0.0.1

Securing etcd

etcd serves as a critical Kubernetes component responsible for storing states and secrets. It requires special protection due to its pivotal role in the cluster. Write access to the API server’s etcd is tantamount to gaining root access over the entire cluster, while even read access can facilitate privilege escalation.

The Kubernetes scheduler relies on etcd to locate pod definitions lacking a node assignment. It then dispatches these pods to an available kubelet for scheduling. Since the API server validates submitted pods before writing them to etcd, malicious users directly writing to etcd can circumvent several security mechanisms, such as PodSecurityPolicies. Administrators must enforce robust authentication mechanisms between the API servers and etcd servers, employing measures like mutual authentication via TLS client certificates. Furthermore, it’s advisable to isolate etcd servers behind a firewall accessible solely by the API servers.

To enhance etcd security, adhere to the etcd service documentation and configure TLS encryption. Subsequently, edit the etcd pod specification file located at /etc/kubernetes/manifests/etcd.yaml on the master node and configure the following parameters accordingly.

--cert-file=</path/to/cert-file>

--key-file=</path/to/key-file>

--client-cert-auth="true"

--trusted-ca-file=</path/to/ca-file>

Limiting access to the primary etcd instance

Allowing other components within the cluster to access the primary etcd instance with read or write access to the full keyspace is equivalent to granting cluster-admin access. Using separate etcd instances for other components or using etcd ACLs to restrict read and write access to a subset of the keyspace is strongly recommended.

Control Plane Configuration

This section contains recommendations for cluster-wide areas, such as authentication and logging. Unlike section 1 these recommendations should apply to all deployments.

1. Authentication and Authorization

Authentication and authorization form Kubernetes’ primary defense against attackers, limiting and securing access to API requests controlling the Kubernetes platform. Authentication (login) must precede authorization (permission to access), with Kubernetes expecting common attributes in REST API requests. Existing organization-wide or cloud-provider-wide access control systems can handle Kubernetes authorization. Kubernetes defaults to denying permissions for API requests, evaluating request attributes against policies to allow or deny access. All parts of an API request must align with established policies to proceed.

External API Authentication for Kubernetes (RECOMMENDED)

- Because Kubernetes’ built-in API authentication mechanisms are comparatively weak, larger or production clusters are advised to use an external API authentication method.

- OpenID Connect (OIDC) enables externalized authentication, employs short-lived tokens, and leverages centralized groups for authorization.

- Managed Kubernetes distributions like GKE, EKS, and AKS support authentication using credentials from their respective IAM providers.

- Kubernetes Impersonation facilitates externalized authentication for managed cloud clusters and on-premises clusters without direct access to API server configuration parameters.

- Additionally, API access should be treated as privileged and utilize Multi-Factor Authentication (MFA) for all user access.

2. Logging

- Ensure that audit logging is enabled, and monitor for unusual or unwanted API calls, particularly authorization failures, which return a “Forbidden” status message. A failure to authorize may indicate attempted use of stolen credentials.

- Pass a policy file to kube-apiserver with the --audit-policy-file flag to activate audit logging. The policy defines which events are logged and at what level: None, Metadata (metadata only), Request (metadata and request), or RequestResponse (metadata, request, and response).

- Managed Kubernetes providers offer access to this data in their consoles and can establish notifications for authorization failures.
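As an illustration of the audit levels listed above, the following shell sketch writes a small audit policy file. The rule selection is an assumption made for the example rather than a recommended baseline, and the file is written to a scratch directory instead of a real control plane path.

```shell
# Write a sample audit policy into a scratch directory (illustrative only).
workdir=$(mktemp -d)
policy_file="$workdir/audit-policy.yaml"

cat > "$policy_file" <<'EOF'
apiVersion: audit.k8s.io/v1
kind: Policy
rules:
  # Record full request and response bodies for changes to RBAC objects.
  - level: RequestResponse
    verbs: ["create", "update", "patch", "delete"]
    resources:
      - group: "rbac.authorization.k8s.io"
  # Secrets and ConfigMaps may hold sensitive data, so keep their bodies out of the log.
  - level: Metadata
    resources:
      - group: ""
        resources: ["secrets", "configmaps"]
  # Everything else is recorded without request or response bodies.
  - level: Metadata
EOF

echo "Sample audit policy written to $policy_file"
```

Rules are evaluated in order and the first match decides the level for an event. To use such a file, copy it to a location the API server can read and reference it with --audit-policy-file; a log destination such as --audit-log-path is also needed for the events to be written anywhere.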

Worker Nodes

This section consists of security recommendations for the components that run on Kubernetes worker nodes. Note that these components may also run on Kubernetes master nodes, so the recommendations in this section should be applied to master nodes as well as worker nodes where the master nodes make use of these components.

1. Worker Node Configuration Files

This section provides recommendations for configuring files on the worker nodes to facilitate an Automated Audit using CIS-CAT. The following parameters must be set on each node being evaluated:

- kubelet_service_config

- kubelet_config

- kubelet_config_yaml

In a kubeadm environment, set these values to their defaults as follows:

export kubelet_service_config=/etc/systemd/system/kubelet.service.d/10-kubeadm.conf

export kubelet_config=/etc/kubernetes/kubelet.conf

export kubelet_config_yaml=/var/lib/kubelet/config.yaml

Ensure that the following service files have permissions set to 600 and are owned by root.

Reason:

The kubelet service file, the kube-proxy kubeconfig file, and kubelet.conf control various parameters that set the behavior of the kubelet and kube-proxy services on the worker node. Restrict their file permissions to maintain the integrity of these files. They should be writable only by administrators on the system.

Solution:

Permissions should be set to 600 and the file must be owned by user root. This can be done with the following commands.

chmod 600 /etc/systemd/system/kubelet.service.d/10-kubeadm.conf <proxy kubeconfig file> /etc/kubernetes/kubelet.conf

chown root:root /etc/systemd/system/kubelet.service.d/10-kubeadm.conf <proxy kubeconfig file> /etc/kubernetes/kubelet.conf

The location of the proxy kubeconfig file can be searched by typing ps -ef | grep kube-proxy in the Linux terminal.

2. Kubelet

The kubelet, functioning as an agent on each node, interacts with the container runtime to manage pod deployment and report metrics for nodes and pods. It exposes an API on each node, enabling operations like starting and stopping pods. Unauthorized access to this API, if obtained by an attacker, could compromise the entire cluster.

To bolster security and reduce the attack surface, consider the following configuration options for locking down the kubelet:

- Disable anonymous access with --anonymous-auth=false so that unauthenticated requests receive an error response. The API server then needs to authenticate itself to the kubelet via --kubelet-client-certificate and --kubelet-client-key.

- Set --authorization-mode to a value other than AlwaysAllow to enforce request authorization. By default, tools like kubeadm configure this as Webhook, which makes the kubelet call the API server to authorize each request.

- Include NodeRestriction in the API server's admission plugin list (--enable-admission-plugins, formerly --admission-control) to limit kubelet permissions. This confines kubelet operations to pods associated with its own node object.

- Set --read-only-port=0 to close the read-only port. This prevents anonymous users from accessing information about running workloads. This port does not allow attackers to control the cluster, but it can be used during the reconnaissance phase of an attack.

- Turn off cAdvisor, which was used in old versions of Kubernetes to provide metrics and has been replaced by Kubernetes API statistics. On those older versions, set --cadvisor-port=0 to avoid exposing information about running workloads.

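The kubelet flags above are set in the kubelet service file (in a kubeadm environment, /etc/systemd/system/kubelet.service.d/10-kubeadm.conf) or as the equivalent fields in /var/lib/kubelet/config.yaml. The /etc/kubernetes/kubelet.conf file is the kubelet's kubeconfig and does not hold these settings. The API server flags belong in the kube-apiserver manifest.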
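Where the kubelet reads a configuration file, the kubelet-side settings from the list above correspond to fields of a KubeletConfiguration object. The shell sketch below writes a sample fragment to a scratch directory so it can be compared with the live file; it covers only these hardening fields and is not a complete kubelet configuration.

```shell
# Write a sample KubeletConfiguration fragment (illustrative, incomplete).
workdir=$(mktemp -d)
sample="$workdir/kubelet-hardening-sample.yaml"

cat > "$sample" <<'EOF'
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
authentication:
  anonymous:
    enabled: false   # same effect as --anonymous-auth=false
  webhook:
    enabled: true    # bearer tokens are verified by the API server
authorization:
  mode: Webhook      # same effect as --authorization-mode=Webhook
readOnlyPort: 0      # same effect as --read-only-port=0
EOF

echo "Sample written to $sample"
```

On a kubeadm node, these fields would be merged into /var/lib/kubelet/config.yaml and the kubelet restarted for the change to take effect.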

Policies

1. Role Based Access to Kubernetes

Implementing Role-Based Access Control in Kubernetes

Role-based access control (RBAC) is a crucial method for managing access to resources within Kubernetes, allowing organizations to regulate access based on user roles. Fortunately, Kubernetes integrates RBAC as a built-in component, providing default roles to define user responsibilities based on desired actions.

RBAC matches users or groups to permissions associated with roles, combining verbs (such as get, create, delete) with resources (e.g., pods, services, nodes). These permissions can be scoped to namespaces or clusters, offering flexibility in access control. RBAC authorization leverages the rbac.authorization.k8s.io API group, enabling dynamic configuration of policies via the Kubernetes API.

To enable RBAC, start the API server with the --authorization-mode flag set to a comma-separated list that includes RBAC. For instance:

kube-apiserver --authorization-mode=Example,RBAC --other-options --more-options

By using the Node and RBAC authorizers together with the NodeRestriction admission plugin, organizations can enforce granular access controls and enhance security within their Kubernetes clusters.
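To make the pairing of verbs and resources concrete, the following shell sketch writes a namespaced Role and a RoleBinding to a scratch directory. The names `pod-reader`, `read-pods`, and the user `jane` are hypothetical and chosen only for illustration.

```shell
# Write a sample Role and RoleBinding (all names are hypothetical).
workdir=$(mktemp -d)
manifest="$workdir/pod-reader-rbac.yaml"

cat > "$manifest" <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  namespace: my-namespace
  name: pod-reader
rules:
  # Read-only access to pods in this namespace.
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  namespace: my-namespace
  name: read-pods
subjects:
  - kind: User
    name: jane
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
EOF

echo "Sample RBAC manifest written to $manifest"
```

The manifest can be applied with `kubectl apply -f` and the result checked with `kubectl auth can-i list pods --as jane -n my-namespace`, which should answer yes, while the same query for `delete` should answer no.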

2. Pod Security Standards

Kubernetes Pod Security Standards are crucial guidelines designed to uphold container security and integrity within Kubernetes clusters. These standards delineate three distinct profiles with varying restrictions to ensure that containerized workloads remain secure against known privilege escalations and adhere to current best practices for Pod hardening:

- Privileged: Offers an unrestricted policy.

- Baseline: Provides minimally-restrictive guardrails while preventing known privilege escalations.

- Restricted: Adheres to current Pod hardening best practices but may limit compatibility.

Compliance with these standards ensures that containerized applications meet industry-standard security requirements within Kubernetes environments. Kubernetes includes a built-in Pod Security admission controller to assess Pods’ isolation levels against these Pod Security Standards.

Once the Pod Security admission controller is enabled and the webhook installed, we can configure the admission control mode in our namespaces:

- Enforce: Rejects Pods with policy violations.

- Audit: Allows Pods with policy violations but includes an audit annotation in the audit log event record.

- Warn: Allows Pods with policy violations but warns users.

Configuring the Pod Security admission controller involves assigning two labels:

- Level: privileged, baseline, or restricted.

- Mode: enforce, audit, or warn.

Furthermore, multiple security checks can be set on any namespace. Administrators can specify the version and check against the policy shipped with that specific Kubernetes minor version. For example, to identify if the namespace “my-namespace” doesn’t meet the latest baseline version of the Pod Security Standards, the following warning can be set up:

kubectl label --overwrite ns my-namespace pod-security.kubernetes.io/warn=baseline pod-security.kubernetes.io/warn-version=latest

By adhering to and implementing these Pod Security Standards, Kubernetes environments can bolster their security posture and mitigate potential risks associated with containerized workloads.
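Because a mistyped mode or level in a namespace label is easy to miss, the labeling scheme above can be wrapped in a small helper that validates both parts before the label is built. This is a sketch only, and `pss_label` is a hypothetical function name.

```shell
# pss_label MODE LEVEL
# Prints a Pod Security admission label for the given mode and level,
# or fails if either value is not one of the three defined options.
# Hypothetical helper for illustration.
pss_label() {
    mode=$1
    level=$2
    case $mode in
        enforce|audit|warn) ;;
        *) echo "invalid mode: $mode" >&2; return 1 ;;
    esac
    case $level in
        privileged|baseline|restricted) ;;
        *) echo "invalid level: $level" >&2; return 1 ;;
    esac
    printf 'pod-security.kubernetes.io/%s=%s\n' "$mode" "$level"
}
```

A namespace can then be labeled with several checks at once, for example `kubectl label --overwrite ns my-namespace "$(pss_label enforce baseline)" "$(pss_label warn restricted)"`, which rejects Pods that violate the baseline profile and warns about anything that falls short of restricted.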

3. Controlling Network Access to Sensitive Ports

Configuring authentication and authorization on both the cluster and cluster nodes is highly recommended. Kubernetes clusters typically listen on a range of well-defined and distinctive ports, making it easier for attackers to identify and potentially exploit vulnerabilities.

Here’s an overview of the default ports used in Kubernetes, along with recommendations to enhance security:

Control plane node(s):

| Protocol | Port Range | Purpose |
| --- | --- | --- |
| TCP | 6443 | Kubernetes API Server |
| TCP | 2379-2380 | etcd server client API |
| TCP | 10250 | Kubelet API |
| TCP | 10251 | kube-scheduler |
| TCP | 10252 | kube-controller-manager |
| TCP | 10255 | Read-Only Kubelet API |

Worker Nodes:

| Protocol | Port Range | Purpose |
| --- | --- | --- |
| TCP | 10250 | Kubelet API |
| TCP | 10255 | Read-Only Kubelet API |
| TCP | 30000-32767 | NodePort Services |

To enhance security:

- Network access to these ports should be restricted, and serious consideration should be given to limiting access to the Kubernetes API server to trusted networks.

- Employ firewall rules or network policies to control traffic to and from these ports.

- Regularly review access controls and adjust them as necessary to minimize the attack surface.

By carefully controlling network access to sensitive ports, organizations can significantly reduce the risk of unauthorized access and potential security breaches within their Kubernetes environments.

Secure Kubernetes Secrets:

Kubernetes secrets contain sensitive information such as API keys or passwords, necessitating secure storage and transmission practices. To enhance security:

Encryption at Rest and in Transit:

Ensure that secrets are encrypted both at rest and in transit. Utilize encryption mechanisms provided by Kubernetes or external encryption solutions to safeguard secrets from unauthorized access.
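As one way to encrypt secrets at rest with the built-in mechanism, the API server accepts an EncryptionConfiguration file through its --encryption-provider-config flag. The shell sketch below generates a random key and writes a sample configuration to a scratch directory. The aescbc provider is used here only because it needs no external service, and the key name `key1` is arbitrary; a KMS-backed provider may be the better choice in production.

```shell
# Generate a key and write a sample EncryptionConfiguration (illustrative).
workdir=$(mktemp -d)
enc_file="$workdir/encryption-config.yaml"

# 32 random bytes, base64-encoded, as the aescbc provider expects.
key=$(head -c 32 /dev/urandom | base64)

cat > "$enc_file" <<EOF
apiVersion: apiserver.config.k8s.io/v1
kind: EncryptionConfiguration
resources:
  - resources:
      - secrets
    providers:
      # The first provider is used to encrypt new writes.
      - aescbc:
          keys:
            - name: key1
              secret: ${key}
      # identity lets the API server still read data stored unencrypted.
      - identity: {}
EOF

# The file contains key material, so keep it readable by its owner only.
chmod 600 "$enc_file"
echo "Sample encryption config written to $enc_file"
```

Secrets that existed before the configuration was enabled stay unencrypted in etcd until they are rewritten, so existing secrets need to be updated once after the API server starts using the file.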

Avoid Embedding Secrets into Container Images:

Refrain from embedding secrets directly into container images. Storing secrets within images poses a significant security risk, as attackers can easily extract them. Instead, leverage Kubernetes secrets management to securely inject secrets into pods at runtime.

Implement Access Controls:

Enforce strict access controls to restrict access to secrets only to authorized entities. Leverage Kubernetes RBAC (Role-Based Access Control) and network policies to control access to secrets and limit exposure to potential threats.

Regularly Rotate Secrets:

Implement a robust secret rotation policy to regularly update and refresh secrets. Periodically rotating secrets mitigates the risk of unauthorized access and minimizes the impact of potential security breaches.

Monitor and Audit Secret Access:

Implement monitoring and auditing mechanisms to track access to secrets and detect any unauthorized or suspicious activities. Monitoring tools and logging frameworks can provide insights into secret usage and help identify potential security incidents.

By adhering to these best practices, organizations can effectively secure Kubernetes secrets, mitigating the risk of unauthorized access and safeguarding sensitive information from potential security threats.

Key Takeaways

Securing cloud-native applications and the underlying infrastructure demands a transformative shift in an organization's security strategy. It necessitates the implementation of controls at earlier stages of the application development life cycle, leveraging built-in controls to enforce policies that mitigate operational and scalability challenges, and keeping pace with accelerated release schedules.

CalCom Hardening Suite streamlines the implementation of security configuration requirements outlined in the CIS benchmarks, ensuring robust protection and compliance across systems.